The Rise of AI Agents and the Risk of Probabilistic Software in High-Stakes Domains

Data accuracy is the only thing that matters when you combine AI and investing

On a recent episode of the All-In podcast, the conversation turned to a shift that's quietly reshaping software: the move from deterministic logic (clear, rule-based systems) to probabilistic models powered by large language models and AI agents. In the abstract, this shift is thrilling. We’re witnessing tools that can reason, summarize, and generate insights at scale. But in practice, especially in domains like finance and investing, it introduces an unsettling amount of risk.

Here, accuracy isn’t just a nice-to-have; it’s everything. A single bad assumption, rounding error, or hallucinated data point can cost millions. Yet the foundation of today’s most powerful large language models (LLMs) is text scraped from unvetted sources (Twitter threads, random blog posts, news articles, low-quality forums), none of which are optimized for financial precision. These models can’t do math reliably, and worse, they often don’t know that they can’t.

Perplexity Labs and others are now experimenting with AI agents that write and run code on the fly, unsupervised and in real time. That’s exciting in theory, but it’s hard to imagine a serious investor, or anyone in a fiduciary role, trusting auto-generated code with capital on the line. Probabilistic software is interesting for ideation and exploration, but when it comes to execution in finance, the margin for error is zero. Until that reliability problem is solved, there’s a dangerous gap between what these tools can do and what we should let them do.

Benjamin AI (www.benjaminai.co) is pioneering a solution that bridges the gap between the probabilistic nature of large language models and the deterministic demands of financial analysis. Its agent orchestration layer draws on vetted financial data sources and prewritten investment code to execute precise investment calculations, ranging from maximum drawdown and rolling betas to advanced stock screening, backtesting, and portfolio optimization. This approach not only ensures accuracy but also dramatically reduces the time required for complex analyses, delivering results in seconds rather than the several minutes a tool like Perplexity Labs needs. In financial markets, that difference matters. By combining the flexibility of AI with the reliability of structured financial data, Benjamin AI offers a pragmatic path forward for professionals seeking to leverage AI in high-stakes investment environments.

As a simple test, we can ask the following question about maximum drawdown, which is easy to verify as of May 29, 2025:

Calculate the YTD maximum drawdown for META, APPL, GOOGL, NVDA, TSLA and compare that to BTC

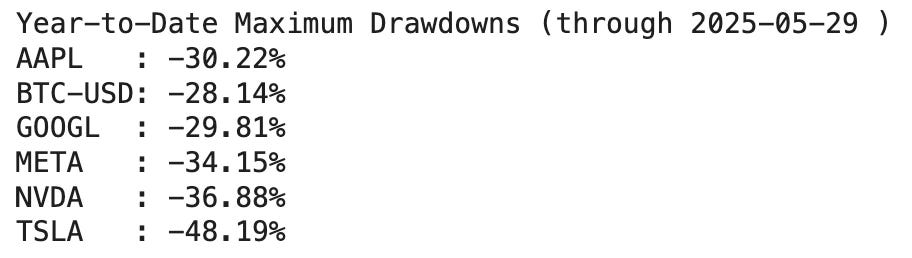
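For reference, here is the standard definition of the quantity being asked for. Given daily closing prices $P_0, \dots, P_T$ over the window, maximum drawdown is the worst decline from a running peak, reported as a negative percentage:

$$
\mathrm{MDD} = \min_{0 \le t \le T} \left( \frac{P_t}{\max_{0 \le s \le t} P_s} - 1 \right)
$$

Because the peak is taken only over $s \le t$, the trough must come after the peak. Simply comparing the highest and lowest prices in the window gives the wrong answer whenever the low came first.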

Let’s start with the ground truth: we calculate the numbers in Python, and they are easy to verify by reading the peak and trough off any price chart from Jan 1, 2025 to May 29, 2025.

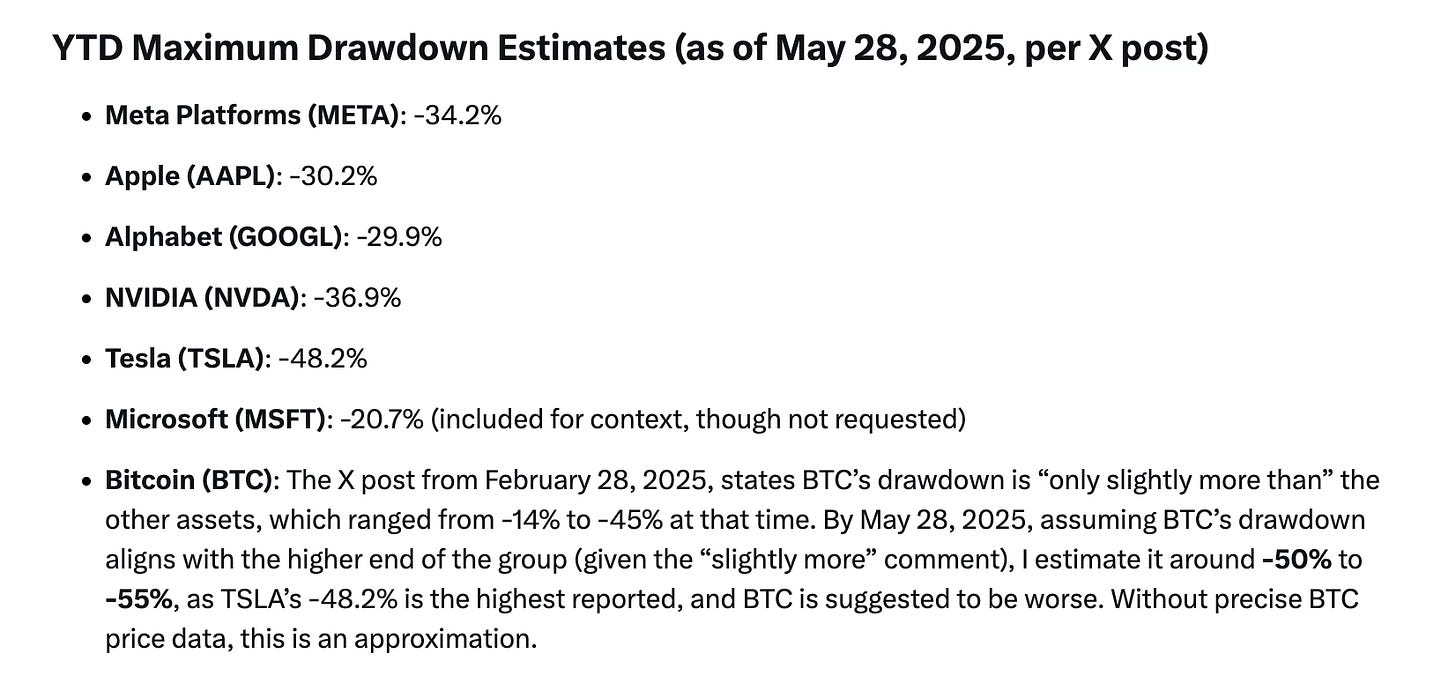
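A minimal sketch of that kind of ground-truth calculation in plain Python follows. It assumes daily closes are already loaded into a `dict` keyed by date from a trusted source (the loading step is omitted), and the function name `max_drawdown_with_dates` is hypothetical. Returning the peak and trough dates alongside the number makes the result easy to check against a chart.

```python
from datetime import date


def max_drawdown_with_dates(
    closes: dict[date, float], start: date, end: date
) -> tuple[float, date, date]:
    """Max drawdown of daily closes within [start, end], plus peak and trough dates.

    Returns (drawdown, peak_date, trough_date), with drawdown as a negative
    fraction (-0.25 means -25%). Prices outside the window are ignored.
    """
    window = sorted((d, p) for d, p in closes.items() if start <= d <= end)
    if not window:
        raise ValueError("no prices in the requested window")
    peak_date, peak = window[0]
    worst, worst_peak, worst_trough = 0.0, peak_date, peak_date
    for d, p in window:
        if p > peak:  # new running high
            peak_date, peak = d, p
        dd = p / peak - 1.0  # decline from the running high
        if dd < worst:
            worst, worst_peak, worst_trough = dd, peak_date, d
    return worst, worst_peak, worst_trough
```

Running it once per ticker with `start=date(2025, 1, 1)` and `end=date(2025, 5, 29)` gives the figures to compare against each model's answer. Since BTC trades every day while the stocks trade only on market days, it is simplest to measure each asset on its own series rather than forcing them onto a shared calendar.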

Starting with ChatGPT’s o3 model, we get:

You can see that o3 essentially makes up the data, or approximates it from some web search.

Now on to Grok:

Grok actually gets lucky, because someone on X had posted YTD maximum drawdowns for these stocks, but it then completely hallucinates the Bitcoin result.

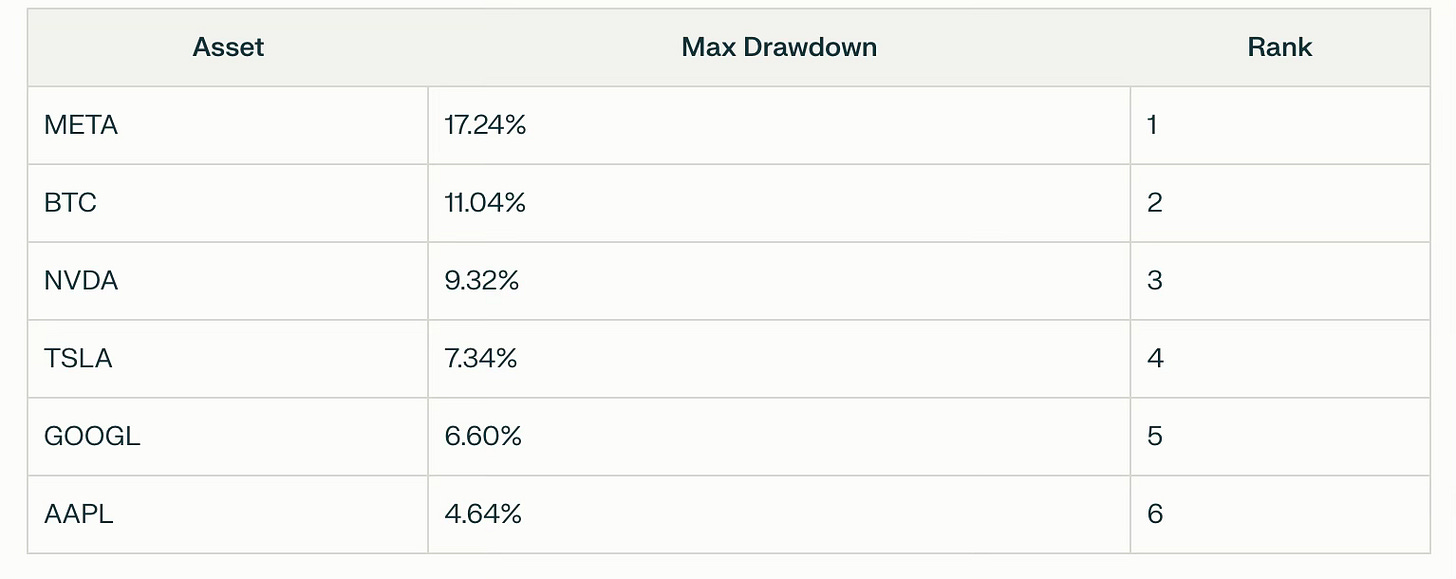

Perplexity returns the following:

To be honest, I’m not even sure what’s going on here, but I suspect it pulled bad data from random websites.

If I wait the nine minutes for a result from Perplexity Labs, the answer is still completely wrong. On examining the code the agent used, it turns out it had sampled random historical data!
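One cheap safeguard against this failure mode is to check, before computing anything, that the input series actually covers the requested window. The sketch below is a hypothetical helper (`check_coverage` and its `slack_days` default are assumptions, not part of any tool discussed here). It catches data from the wrong period or with large holes, but it cannot detect values fabricated for the correct dates.

```python
from datetime import date, timedelta


def check_coverage(dates: list[date], start: date, end: date, slack_days: int = 4) -> None:
    """Raise ValueError unless `dates` lies inside [start, end] and spans it.

    `slack_days` (a hypothetical default) tolerates weekends and market
    holidays at the window edges and between consecutive observations.
    """
    if not dates:
        raise ValueError("empty series")
    ordered = sorted(dates)
    first, last = ordered[0], ordered[-1]
    slack = timedelta(days=slack_days)
    if first < start or last > end:
        raise ValueError(f"series runs {first}..{last}, outside {start}..{end}")
    if first - start > slack or end - last > slack:
        raise ValueError(f"series {first}..{last} does not cover {start}..{end}")
    # A large hole in the middle may indicate sampled or stitched-together data.
    for a, b in zip(ordered, ordered[1:]):
        if b - a > slack:
            raise ValueError(f"{(b - a).days}-day gap between {a} and {b}")
```

Calling this on each series before the drawdown step turns "the agent quietly used the wrong data" into a hard error that a human sees.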

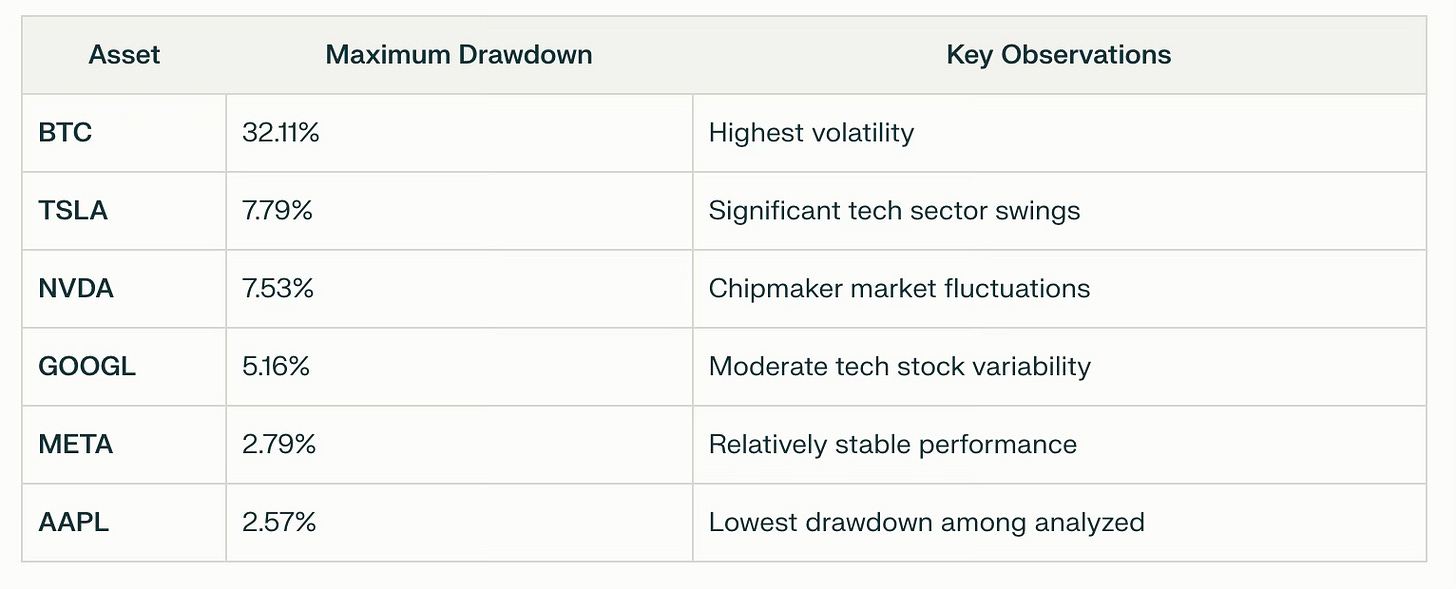

Finally, asking Benjamin AI:

Here Benjamin AI gets this right, and I run the risk of this coming across as a cherry-picked example. However, the overall goal for Benjamin AI is to connect to high-quality data under the hood and pair it with vetted investment tools that our agent orchestration can combine. We believe this is the way forward for reducing data-accuracy error rates when working with probabilistic software.
